Leading AI Models for Healthcare: AI Patient Chart Review for Clinics and the Top Open Source Medical LLMs of 2024

AI patient chart review for clinics is changing how healthcare professionals manage and analyze patient data, streamlining workflows and improving the accuracy of clinical decision-making. As technology continues to redefine what is possible in healthcare, Artificial Intelligence (AI) has become a pivotal force in clinical operations and patient care. The year 2024 marks a significant advance in integrating AI into healthcare systems, particularly through open-source Large Language Models (LLMs). These models are more than tools: they help healthcare professionals work with greater efficiency and accuracy, from synthesizing complex patient data to supporting real-time decision-making, and their potential in healthcare continues to grow.

AI’s value is especially clear in clinician workflows, where precision and efficiency are paramount. AI patient chart review for clinics integrates patient data, automates routine processes, and supports higher standards of care. When powered by open-source LLMs, it can be customized and scaled to the specific needs of each healthcare facility. It turns multimodal data, including electronic health records, audio conversations, and images, into actionable insights for clinicians, automating and optimizing clinical workflows so that providers can focus on delivering excellent patient care.

LLMs for Clinical Workflow Automation

Introduction to LLMs and Their Role in Healthcare

Large Language Models (LLMs) stand out among AI technologies for their ability to understand and generate human-like text, which makes them particularly valuable in healthcare settings. They can process and analyze large volumes of unstructured data from sources such as electronic health records (EHRs), recordings of doctor-patient conversations, and, in multimodal variants, medical images. By integrating LLMs, healthcare providers can harness these data streams to improve diagnosis, treatment planning, and patient care with greater precision and efficiency.

LLM-powered chart review goes beyond data processing. It decodes the complexities of medical language and patient information to support more effective clinical decisions. This capability is crucial for extracting actionable insights from EHRs, which often contain large amounts of unstructured text that is hard to navigate. By automating data extraction and interpretation, AI patient chart review for clinics reduces clinicians’ cognitive load and frees them to focus on patient care. Researchers at Stanford recently published one such study: https://www.nature.com/articles/s41591-024-02855-5
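The extraction step can be made concrete with a minimal Python sketch. It assumes the model is exposed as a plain `generate(prompt) -> str` function and is asked to reply in JSON. The prompt wording, the field names (`problems`, `medications`, `allergies`), and the `fake_generate` stub are hypothetical illustrations. This is not Sporo Health’s pipeline or any particular model’s API.

```python
import json
from typing import Callable

# Hypothetical fields to extract; a real chart-review schema would be defined by the clinic.
FIELDS = ("problems", "medications", "allergies")


def build_prompt(note: str) -> str:
    """Wrap a free-text clinical note in a JSON-extraction instruction."""
    keys = ", ".join(f'"{k}"' for k in FIELDS)
    return (
        "You are assisting with a clinical chart review.\n"
        f"Return only a JSON object with the keys {keys}, "
        "each mapped to a list of short strings taken from the note.\n\n"
        f"Note:\n{note}\n"
    )


def parse_findings(raw: str) -> dict[str, list[str]]:
    """Parse the model's reply; missing keys become empty lists, invalid JSON raises ValueError."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    return {k: [str(x) for x in data.get(k, [])] for k in FIELDS}


def review_note(note: str, generate: Callable[[str], str]) -> dict[str, list[str]]:
    """Send one note through any text-in/text-out model and return structured findings."""
    return parse_findings(generate(build_prompt(note)))


if __name__ == "__main__":
    # Offline stand-in for a real LLM call (hypothetical reply).
    def fake_generate(prompt: str) -> str:
        return '{"problems": ["type 2 diabetes"], "medications": ["metformin 500 mg"]}'

    note = "58-year-old male with T2DM on metformin 500 mg BID. NKDA."
    print(review_note(note, fake_generate))
```

The model sits behind a plain callable. This makes it straightforward to swap the stub for a real open-source model such as those described below, while the prompt and the parsing logic stay unchanged.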

Benefits of Automating Clinician Workflows with AI

AI patient chart review for clinics powered by LLMs can help reduce clinician burnout by automating tedious tasks such as chart review and analysis. Healthcare professionals routinely sift through overwhelming amounts of data, which can lead to fatigue and lower the quality of patient care. LLM-driven tools can automate manual chart reviews, analysis, and even preliminary diagnosis, freeing up clinicians’ time and reducing their cognitive burden.

With AI patient chart review for clinics, automation also extends to doctor-patient interactions. AI models can transcribe, translate, and analyze spoken content, giving clinicians concise summaries and relevant medical insights drawn from those conversations. This improves the accuracy of medical records and gives clinicians a clearer picture of patients’ concerns and conditions, which leads to better-informed decisions.

Top 5 Open Source LLMs in Healthcare

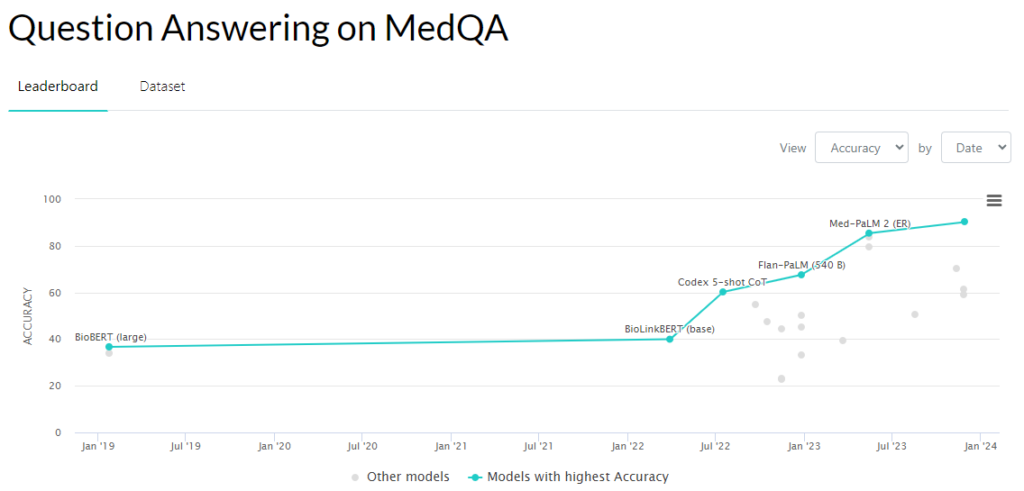

Among the many applications, one important benchmark for evaluating medical-specific LLMs is “Question Answering on MedQA”. It tests how well a model interprets and answers complex multiple-choice medical questions drawn from medical licensing exams such as the USMLE. The models below have shown promising results in academic and practical applications. This work parallels the research and development at Sporo Health, which focuses on automating clinical workflows and enhancing data-driven decisions.
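Because MedQA items are multiple-choice, scoring a model involves three steps:

- formatting each question with lettered options,
- extracting the letter the model picks,
- computing accuracy.

The Python sketch below assumes a simple item layout (`question`, an `options` dict, and an `answer` letter) and a `generate` callable. These names and the regex-based answer extraction are illustrative assumptions. They are not the official MedQA evaluation harness. Solved examples can optionally be prepended to mimic k-shot setups, such as the 8-shot setting mentioned below.

```python
import re
from typing import Callable, Optional, Sequence

# Hypothetical item layout: {"question": str, "options": {"A": str, ...}, "answer": "B"}
Item = dict


def format_item(item: Item, with_answer: bool = False) -> str:
    """Render one question with lettered options, optionally followed by the gold answer."""
    lines = [f"Question: {item['question']}"]
    lines += [f"{letter}. {text}" for letter, text in sorted(item["options"].items())]
    lines.append(f"Answer: {item['answer']}" if with_answer else "Answer:")
    return "\n".join(lines)


def build_prompt(item: Item, shots: Sequence[Item] = ()) -> str:
    """k-shot prompt: solved example items first, then the unanswered target question."""
    blocks = [format_item(s, with_answer=True) for s in shots]
    blocks.append(format_item(item))
    return "\n\n".join(blocks)


def extract_choice(reply: str, letters: str = "ABCDE") -> Optional[str]:
    """Return the first standalone capital option letter in the reply, or None."""
    match = re.search(rf"\b([{letters}])\b", reply)
    return match.group(1) if match else None


def accuracy(items: Sequence[Item], generate: Callable[[str], str],
             shots: Sequence[Item] = ()) -> float:
    """Fraction of items whose extracted letter matches the gold answer."""
    if not items:
        return 0.0
    correct = 0
    for item in items:
        reply = generate(build_prompt(item, shots))
        if extract_choice(reply, "".join(item["options"])) == item["answer"]:
            correct += 1
    return correct / len(items)


if __name__ == "__main__":
    item = {
        "question": "Which drug is a common first-line oral therapy for type 2 diabetes?",
        "options": {"A": "Insulin glargine", "B": "Metformin", "C": "Warfarin", "D": "Aspirin"},
        "answer": "B",
    }
    # A trivial stand-in "model" that always answers B.
    print(accuracy([item], lambda prompt: "The answer is B."))
```

Naive letter extraction can misfire on free-form replies, for example a reply that opens with the article “A”. Published evaluations therefore usually constrain the output format or compare option likelihoods instead.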

Meditron 70B: A Pioneering AI in Medical Reasoning

Meditron 70B is at the forefront of AI-driven healthcare solutions and is designed specifically to tackle the complexities of medical data and reasoning. It stands out among open-source Large Language Models (LLMs). With 70 billion parameters, it has the capacity to process and analyze extensive medical data. Meditron 70B was pretrained on a diverse medical corpus, which strengthens its ability to process and analyze complex patient information and makes it a candidate for AI patient chart review. The corpus includes selected PubMed articles and abstracts, a new dataset of internationally recognized medical guidelines, and general-domain data from RedPajama-v1.

Meditron 70B was refined and specialized to make it effective in the medical field. After fine-tuning on relevant training data, it outperforms predecessors and contemporaries such as Llama-2-70B, GPT-3.5 (text-davinci-003, 8-shot), and Flan-PaLM across a variety of medical reasoning tasks.

Find the link here: https://paperswithcode.com/paper/meditron-70b-scaling-medical-pretraining-for

BioMistral 7B: Advancing Biomedical Insights with AI

BioMistral 7B is a Large Language Model (LLM) tailored specifically to the biomedical domain. It is built on the general-purpose Mistral model and was further pre-trained on an extensive collection of texts from PubMed Central, which improves its relevance in medical contexts. This specialized training helps BioMistral handle a wide range of biomedical data and translate complex medical information into actionable insights, which is crucial for clinical decision-making and for advancing medical research.

For AI patient chart review, BioMistral 7B’s strength in medical question answering is notable. In its authors’ evaluation, it outperformed existing open-source medical models in accuracy and reliability. Its capabilities also extend beyond English: it has been evaluated in seven additional languages, a significant step for multilingual medical LLMs. This testing underscores BioMistral’s potential to deliver consistent, reliable AI-powered insights across diverse languages.

Find the link here: https://huggingface.co/BioMistral/BioMistral-7B

MedAlpaca 7B: Enhancing Medical Dialogue with AI

MedAlpaca 7B is a Large Language Model (LLM) with 7 billion parameters, fine-tuned specifically for the medical domain. It is built on the LLaMA architecture and is engineered to excel at question answering and medical dialogue. It supports more efficient and accurate exchanges between medical professionals and AI systems and improves the accessibility and quality of information available for patient care and decision support.

MedAlpaca 7B’s training draws on several data sources:

- Anki flashcards, used to generate medical questions;
- Wikidoc, used to create question-answer pairs;
- StackExchange, mined for high-quality interactions across health-related categories.

The model also incorporates a ChatDoctor dataset of 200,000 question-answer pairs, which further improves its precision and factual accuracy in medical dialogue. With these diverse inputs, MedAlpaca 7B is well placed to strengthen AI’s role in medical communication and information retrieval.

Find the link here: https://huggingface.co/medalpaca/medalpaca-7b
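Before fine-tuning, sources like MedAlpaca’s are typically normalized into one instruction-tuning format. The Python sketch below shows this step. It converts flashcard-style front/back pairs and generic question-answer pairs into Alpaca-style records (`instruction`, `input`, `output`) and drops empty or duplicate entries. The raw field names and the instruction wording are assumptions for illustration. This is not MedAlpaca’s actual preprocessing code.

```python
from typing import Iterable

# Hypothetical instruction wording; real datasets may phrase this differently per source.
INSTRUCTION = "Answer this medical question truthfully."


def to_record(question: str, answer: str, instruction: str = INSTRUCTION) -> dict:
    """Build one Alpaca-style example with instruction/input/output fields."""
    return {"instruction": instruction, "input": question.strip(), "output": answer.strip()}


def build_dataset(flashcards: Iterable[dict], qa_pairs: Iterable[dict]) -> list[dict]:
    """Merge flashcards ({'front', 'back'}) and QA pairs ({'question', 'answer'}),
    skipping empty entries and case-insensitive duplicates."""
    pairs = [(c["front"], c["back"]) for c in flashcards]
    pairs += [(p["question"], p["answer"]) for p in qa_pairs]
    records, seen = [], set()
    for question, answer in pairs:
        key = (question.strip().lower(), answer.strip().lower())
        if not key[0] or not key[1] or key in seen:
            continue
        seen.add(key)
        records.append(to_record(question, answer))
    return records


if __name__ == "__main__":
    cards = [{"front": "What does HbA1c measure?",
              "back": "Average blood glucose over roughly the past three months."}]
    qa = [
        {"question": "What does HbA1c measure? ",  # duplicate apart from whitespace
         "answer": "Average blood glucose over roughly the past three months."},
        {"question": "", "answer": "Orphaned answer with no question."},
    ]
    for record in build_dataset(cards, qa):
        print(record)
```

Deduplicating on normalized text is a simple choice. Near-duplicate detection, such as fuzzy matching, would catch more overlap between sources at the cost of extra complexity.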

BioMedGPT: Bridging Biological Modalities with AI in Biomedicine

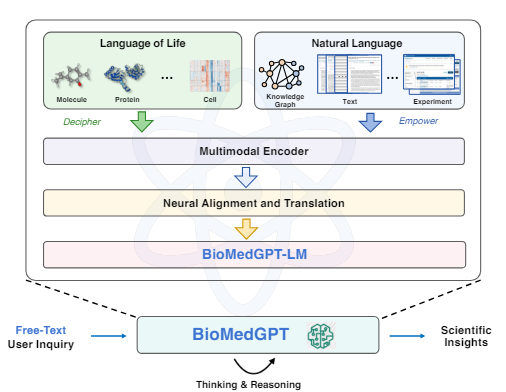

BioMedGPT is a notable advance in biomedicine built on multimodal generative pre-trained transformers (GPT). It is designed to bridge complex biological modalities, such as molecules, proteins, and cells, with human natural language, so that users can interact with the “language of life” through free text. This opens a distinctive and effective communication channel for biomedical research and practice. By aligning biological modalities with natural language, BioMedGPT makes biomedical data more accessible and interpretable, paving the way for more intuitive and productive scientific exploration.

BioMedGPT-10B, a specific version of the model, unifies the feature spaces of molecules, proteins, and natural language, enabling precise encoding and alignment. On biomedical question-answering tasks it has performed on par with human experts and better than larger general-purpose models. Its capabilities extend to specialized areas such as molecule and protein QA, which are critical for accelerating drug discovery and identifying new therapeutic targets. The model and its resources, including the PubChemQA and UniProtQA datasets, are open-sourced. This makes them available to the community for further research and development and marks a significant step forward in integrating AI into biomedicine.

Find the link here: https://paperswithcode.com/paper/biomedgpt-open-multimodal-generative-pre

MedPaxTral-2x7b: A Synergistic Approach to Medical LLMs

MedPaxTral-2x7b applies a Mixture of Experts (MoE) approach that combines the strengths of three leading models: BioMistral, Meditron, and MedAlpaca. It was built with the MergeKit library, which merges multiple models into a single Large Language Model that carries over their individual capabilities. The merged model aims to process and analyze medical data more efficiently and effectively and to advance the automation of clinician workflows.

MedPaxTral-2x7b is a research initiative from Sporo Health. It reflects the organization’s commitment to using in-house medical LLMs to optimize healthcare operations, support clinical decision-making, and improve healthcare delivery.
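The Mixture of Experts idea behind MedPaxTral-2x7b works as follows. A small router scores every expert for each input, and only the top-scoring experts run. Their outputs are then blended using renormalized router weights. The pure-Python sketch below shows top-2 routing over toy experts and illustrates the general technique only. The router weights and expert functions are made up. This is not MergeKit’s code or MedPaxTral-2x7b’s actual configuration. In MergeKit-style merges, the experts are typically the feed-forward blocks of the source models.

```python
import math
from typing import Callable, Sequence

Vector = list[float]


def softmax(xs: Sequence[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def router_logits(x: Vector, gate: Sequence[Vector]) -> list[float]:
    """Linear router: one score per expert, i.e. logits = W_g @ x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in gate]


def top_k_route(logits: Sequence[float], k: int = 2) -> list[tuple[int, float]]:
    """Keep the k highest-scoring experts; softmax over just those renormalizes their weights."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    return list(zip(top, softmax([logits[i] for i in top])))


def moe_forward(x: Vector, experts: Sequence[Callable[[Vector], Vector]],
                gate: Sequence[Vector], k: int = 2) -> Vector:
    """Sparse MoE layer: only the selected experts run, and their outputs are blended."""
    out = [0.0] * len(x)
    for idx, weight in top_k_route(router_logits(x, gate), k):
        y = experts[idx](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


if __name__ == "__main__":
    # Toy experts standing in for feed-forward blocks taken from different source models.
    experts = [
        lambda v: [2 * t for t in v],
        lambda v: [t + 1 for t in v],
        lambda v: [-t for t in v],
    ]
    gate = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]  # made-up router weights
    x = [1.0, 1.0]
    print(top_k_route(router_logits(x, gate)))
    print(moe_forward(x, experts, gate))
```

Only k experts execute per input, so an MoE model can hold more total parameters than a dense model while spending roughly the compute of k experts on each token.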

Conclusion

AI patient chart review for clinics is rapidly becoming a cornerstone of modern healthcare, offering new opportunities to improve patient care and operational efficiency. By automating the tedious and error-prone task of reviewing patient records, it frees clinicians to focus on the most critical aspects of patient care.

The process integrates data from various sources, such as electronic health records, diagnostic reports, and physician notes, into a unified platform. This gives healthcare teams accurate, timely insights that drive better clinical outcomes. As adoption rises, AI patient chart review promises to become an integral tool for transforming healthcare systems, reducing administrative burdens, and improving patient satisfaction.

Selecting the right Large Language Model (LLM) for a healthcare application requires careful consideration of the specific use case, the model’s alignment with clinical needs, and the practicality of integrating it into existing systems. Effective implementation often requires iterative, trial-and-error experimentation to confirm that a model meets the theoretical requirements and also performs well in real-world scenarios. At Sporo Health, we carefully select and align LLMs to meet the nuanced demands of the medical industry. We fine-tune them for specific downstream tasks to maximize their efficiency and usefulness in improving clinical workflows and patient outcomes.

Beyond saving time, AI-assisted chart review helps reduce human error when clinicians interpret complex medical information. In today’s healthcare environment, data overload can delay timely and effective decisions. AI patient chart review for clinics turns large datasets, including electronic health records, medical images, and audio from doctor-patient conversations, into useful insights. This lets clinicians provide more efficient care while patient data is handled with precision and timeliness. As the technology continues to evolve, its potential to improve both the efficiency and the effectiveness of clinical operations is becoming increasingly evident.

For healthcare organizations looking to use the latest advances in AI to improve care delivery, Sporo Health offers tailored solutions. Book a demo today to see first-hand how our specialized LLMs can transform your clinical operations and help you achieve optimal results.

[…] For more information on healthcare AI models, check out our post on them here. […]

[…] For more information on various open source models, see our article on medical LLMs. […]

Rattling wonderful information can be found on web blog.Raise blog range

Hey there! Do you know if they make any plugins to help with Search

Engine Optimization? I’m trying to get my website

to rank for some targeted keywords but I’m not seeing very good

success. If you know of any please share. Thanks!

You can read similar text here: Blankets

Can you be more specific about the content of your article? After reading it, I still have some doubts. Hope you can help me.

Thank you for your sharing. I am worried that I lack creative ideas. It is your article that makes me full of hope. Thank you. But, I have a question, can you help me?

Thank you for your sharing. I am worried that I lack creative ideas. It is your article that makes me full of hope. Thank you. But, I have a question, can you help me?

Thank you for your sharing. I am worried that I lack creative ideas. It is your article that makes me full of hope. Thank you. But, I have a question, can you help me?

Thank you for your sharing. I am worried that I lack creative ideas. It is your article that makes me full of hope. Thank you. But, I have a question, can you help me?

Can you be more specific about the content of your article? After reading it, I still have some doubts. Hope you can help me.

Thank you for your sharing. I am worried that I lack creative ideas. It is your article that makes me full of hope. Thank you. But, I have a question, can you help me?

Hey there! Do you know if they make any plugins to

assist with Search Engine Optimization? I’m trying to get my website to rank

for some targeted keywords but I’m not seeing

very good gains. If you know of any please share.

Appreciate it! You can read similar art here: Your destiny

I don’t think the title of your article matches the content lol. Just kidding, mainly because I had some doubts after reading the article.

Thanks for sharing. I read many of your blog posts, cool, your blog is very good.

I am really impressed along with your writing abilities and also with the layout for your weblog. Is this a paid subject or did you customize it yourself? Either way stay up the excellent high quality writing, it’s uncommon to look a great weblog like this one today. I like sporohealth.com ! It is my: Lemlist

I am extremely inspired with your writing talents as well as with the format to your blog. Is that this a paid subject matter or did you customize it yourself? Either way keep up the nice high quality writing, it is rare to see a great blog like this one nowadays. I like sporohealth.com ! It’s my: Stan Store

I don’t think the title of your article matches the content lol. Just kidding, mainly because I had some doubts after reading the article.

Your point of view caught my eye and was very interesting. Thanks. I have a question for you.

Thanks for sharing. I read many of your blog posts, cool, your blog is very good.

Your article helped me a lot, is there any more related content? Thanks!

Thanks for sharing. I read many of your blog posts, cool, your blog is very good.

I don’t think the title of your article matches the content lol. Just kidding, mainly because I had some doubts after reading the article.

маркетплейс для реселлеров https://birzha-akkauntov-online.ru/

маркетплейс для реселлеров https://marketplace-akkauntov-top.ru/

купить аккаунт https://magazin-akkauntov-online.ru/

площадка для продажи аккаунтов https://ploshadka-prodazha-akkauntov.ru/

маркетплейс аккаунтов биржа аккаунтов

гарантия при продаже аккаунтов https://kupit-akkaunt-top.ru/

продать аккаунт https://pokupka-akkauntov-online.ru/

Account Trading Service Marketplace for Ready-Made Accounts

Buy Pre-made Account Account Trading Service

Database of Accounts for Sale Buy Pre-made Account

Account Selling Platform Account Store

Accounts marketplace Accounts for Sale

Sell Pre-made Account Accounts market

Account Trading Service Account Catalog

Ready-Made Accounts for Sale Sell Pre-made Account

Online Account Store Account Selling Platform

Gaming account marketplace Account trading platform

Buy Account Website for Buying Accounts

purchase ready-made accounts account exchange

buy pre-made account account selling platform

account market account acquisition

buy and sell accounts sell accounts

account selling service database of accounts for sale

website for buying accounts profitable account sales

secure account sales account market

ready-made accounts for sale account exchange

account selling platform buy accounts

accounts market accounts for sale

account sale sell account

Just here to join conversations, exchange ideas, and gain fresh perspectives throughout the journey.

I enjoy understanding different opinions and adding to the conversation when possible. Interested in hearing fresh thoughts and meeting like-minded people.

Here’s my site:https://automisto24.com.ua/

sell account marketplace for ready-made accounts

account selling platform sell accounts

secure account purchasing platform account purchase

account exchange service sell account

account market account sale

accounts market secure account sales

Happy to dive into discussions, share thoughts, and gain fresh perspectives as I go.

I’m interested in learning from different perspectives and adding to the conversation when possible. Interested in hearing new ideas and connecting with others.

Here’s my web-site-https://automisto24.com.ua/

account buying platform accounts marketplace

verified accounts for sale gaming account marketplace

accounts market accounts for sale

Your article helped me a lot, is there any more related content? Thanks!

buy and sell accounts account trading platform

accounts for sale secure account purchasing platform

sell accounts account trading service

sell accounts account store

account acquisition accounts marketplace

account purchase database of accounts for sale

account catalog social media account marketplace

account sale guaranteed accounts

account exchange service https://accounts-offer.org/

accounts market https://accounts-marketplace.xyz

account trading https://buy-best-accounts.org

account acquisition https://social-accounts-marketplaces.live

Thank you for your sharing. I am worried that I lack creative ideas. It is your article that makes me full of hope. Thank you. But, I have a question, can you help me?

Your point of view caught my eye and was very interesting. Thanks. I have a question for you.

find accounts for sale https://accounts-marketplace.live

marketplace for ready-made accounts https://social-accounts-marketplace.xyz

account market account market

Your article helped me a lot, is there any more related content? Thanks!

marketplace for ready-made accounts https://buy-accounts-shop.pro

secure account purchasing platform https://social-accounts-marketplace.live

account catalog https://buy-accounts.live

account market https://accounts-marketplace.online

Your article helped me a lot, is there any more related content? Thanks!

account purchase https://accounts-marketplace-best.pro

маркетплейс аккаунтов соцсетей https://akkaunty-na-prodazhu.pro

продать аккаунт https://rynok-akkauntov.top/

площадка для продажи аккаунтов маркетплейсов аккаунтов

маркетплейс аккаунтов соцсетей https://akkaunt-magazin.online

магазин аккаунтов https://akkaunty-market.live

продажа аккаунтов kupit-akkaunty-market.xyz

магазин аккаунтов https://akkaunty-optom.live/

покупка аккаунтов https://online-akkaunty-magazin.xyz/

маркетплейс аккаунтов соцсетей akkaunty-dlya-prodazhi.pro

площадка для продажи аккаунтов https://kupit-akkaunt.online

cheap facebook accounts https://buy-adsaccounts.work

buy ad account facebook https://buy-ad-accounts.click

cheap facebook account https://buy-ad-account.top

buy aged facebook ads accounts https://buy-ads-account.click

buy ad account facebook https://ad-account-buy.top/

buy aged facebook ads accounts https://buy-ads-account.work/

buying facebook ad account https://ad-account-for-sale.top

buying fb accounts https://buy-ad-account.click

Этот информационный обзор станет отличным путеводителем по актуальным темам, объединяющим важные факты и мнения экспертов. Мы исследуем ключевые идеи и представляем их в доступной форме для более глубокого понимания. Читайте, чтобы оставаться в курсе событий!

Изучить вопрос глубже – https://medalkoblog.ru/

buy accounts facebook buy aged fb account

buy google ads invoice account https://buy-ads-account.top

buy google ad threshold account https://buy-ads-accounts.click

buy facebook account https://buy-accounts.click/

buy google ads agency account https://ads-account-for-sale.top

sell google ads account https://ads-account-buy.work

buy google ads verified account google ads account for sale

buy google ads threshold account https://buy-account-ads.work

buy google ads threshold accounts https://buy-ads-agency-account.top/

buy adwords account https://sell-ads-account.click

buy old google ads account https://ads-agency-account-buy.click

unlimited bm facebook buy-business-manager.org

google ads account for sale https://buy-verified-ads-account.work

buy verified facebook business manager https://buy-bm-account.org/

buy facebook business manager verified buy-business-manager-acc.org

buy facebook business manager account https://buy-verified-business-manager-account.org/

verified bm for sale buy verified business manager facebook

Эта статья сочетает познавательный и занимательный контент, что делает ее идеальной для любителей глубоких исследований. Мы рассмотрим увлекательные аспекты различных тем и предоставим вам новые знания, которые могут оказаться полезными в будущем.

Выяснить больше – https://slnc.in/the-number-1-secret-of-success

В этом информативном обзоре собраны самые интересные статистические данные и факты, которые помогут лучше понять текущие тренды. Мы представим вам цифры и графики, которые иллюстрируют, как развиваются различные сферы жизни. Эта информация станет отличной основой для глубокого анализа и принятия обоснованных решений.

Разобраться лучше – https://www.jvassurancesconseils.com/obstetrics-and-gynaecology

Симптоматика ломки может варьироваться: от бессонницы, сильной тревожности и раздражительности до выраженных физически болезненных ощущений, таких как мышечные спазмы, судороги, потливость, головокружение и тошнота. В критических ситуациях, когда симптомы достигают остроты, своевременная медицинская помощь становится жизненно необходимой для предотвращения осложнений и стабилизации состояния пациента.

Изучить вопрос глубже – http://snyatie-lomki-novosibirsk8.ru

Стационарная программа позволяет стабилизировать не только физическое состояние, но и эмоциональную сферу. Находясь в изоляции от внешних раздражителей и вредных контактов, пациент получает шанс сконцентрироваться на себе и начать реабилитацию без давления извне.

Подробнее тут – http://narkologicheskaya-pomoshch-balashiha1.ru

Назначение и действие

Получить больше информации – врач нарколог на дом нижний новгород

Функция

Изучить вопрос глубже – снятие ломки на дому недорого нижний новгород

Ломка – это тяжелый синдром отмены, возникающий после длительного употребления алкоголя или наркотических веществ. При резком прекращении их приема нервная система и другие органы начинают страдать от недостатка необходимых веществ, что приводит к сильному дискомфорту, психоэмоциональным нарушениям и ухудшению общего состояния организма. В Новосибирске наркологическая клиника «Возрождение» оказывает экстренную помощь при снятии ломки, обеспечивая безопасное, круглосуточное и конфиденциальное лечение под наблюдением опытных специалистов.

Выяснить больше – снятие ломки нарколог новосибирск

Постановка капельницы от запоя проводится при наличии следующих клинических симптомов, свидетельствующих о критическом состоянии организма:

Изучить вопрос глубже – http://

Абстинентный синдром — одно из самых тяжёлых и опасных проявлений наркотической зависимости. Он развивается на фоне резкого отказа от приёма веществ и сопровождается сильнейшими нарушениями работы организма. Это состояние требует немедленного вмешательства. Самостоятельно справиться с ним невозможно — особенно если речь идёт о героине, метадоне, синтетических наркотиках или длительной зависимости. В клинике «НаркоПрофи» мы организовали систему снятия ломки в Подольске, работающую круглосуточно: как на дому, так и в условиях стационара.

Подробнее тут – снятие ломки на дому недорого

Наркологическая клиника «Эдельвейс» в Екатеринбурге специализируется на оказании оперативной и квалифицированной помощи при снятии ломки. Наши специалисты обладают многолетним опытом работы и применяют современные методики для безопасного и эффективного лечения абстинентного синдрома. Мы работаем круглосуточно, что позволяет оказывать помощь в любое время суток, обеспечивая анонимность и конфиденциальность каждого пациента.

Подробнее – снять ломку екатеринбург

Функция

Подробнее можно узнать тут – снятие наркологической ломки в нижний новгороде

Как отмечает главный врач клиники, кандидат медицинских наук Сергей Иванов, «мы создали систему, при которой пациент получает помощь в течение часа — независимо от дня недели и времени суток. Это принципиально меняет шансы на выздоровление».

Подробнее – вызвать наркологическую помощь

При наличии этих симптомов организм находится в критическом состоянии, и любой промедление с вызовом врача может привести к развитию серьезных осложнений, таких как сердечно-сосудистые нарушения, тяжелые неврологические симптомы или даже жизнеугрожающие состояния. Экстренное вмешательство позволяет не только снять острые симптомы ломки, но и предотвратить необратимые изменения в организме.

Узнать больше – http://snyatie-lomki-novosibirsk8.ru

buy facebook business manager https://business-manager-for-sale.org

Задача врачей — не просто облегчить симптомы, а купировать осложнения, стабилизировать жизненно важные функции, вернуть пациенту способность к дальнейшему лечению. Мы работаем быстро, анонимно и профессионально. Любой человек, оказавшийся в кризисе, может получить помощь в течение часа после обращения.

Получить дополнительные сведения – http://www.domen.ru

tiktok agency account for sale https://buy-tiktok-ads-accounts.org

Именно поэтому так важно не терять время. Чем раньше пациент получает помощь, тем выше шансы избежать необратимых последствий и вернуться к нормальной жизни.

Ознакомиться с деталями – снятие ломки на дому московская область

В клинике «Трезвая Жизнь» для эффективного вывода организма из запоя используется комплексный подход, который включает применение различных групп препаратов. Приведенная ниже таблица отражает основные компоненты нашей терапии и их функции:

Изучить вопрос глубже – narkolog-vyvod-iz-zapoya ekaterinburg

Процесс начинается с вызова врача или доставки пациента в клинику. После прибытия специалист проводит первичную диагностику: измерение давления, температуры, пульса, уровня кислорода в крови, визуальная оценка степени возбуждения или угнетения сознания. Собирается краткий анамнез: какой наркотик принимался, как долго, были ли сопутствующие заболевания.

Получить дополнительные сведения – https://snyatie-lomki-podolsk1.ru/snyatie-lomki-narkolog-v-podolske/

Абстинентный синдром — одно из самых тяжёлых и опасных проявлений наркотической зависимости. Он развивается на фоне резкого отказа от приёма веществ и сопровождается сильнейшими нарушениями работы организма. Это состояние требует немедленного вмешательства. Самостоятельно справиться с ним невозможно — особенно если речь идёт о героине, метадоне, синтетических наркотиках или длительной зависимости. В клинике «НаркоПрофи» мы организовали систему снятия ломки в Подольске, работающую круглосуточно: как на дому, так и в условиях стационара.

Детальнее – снятие ломки подольск
